Why Elon Musk and Steve Wozniak have said AI can ‘pose profound risks to society and humanity’

In a recent open letter, tech researchers and CEOs called for an immediate pause on the training of AI systems like ChatGPT.

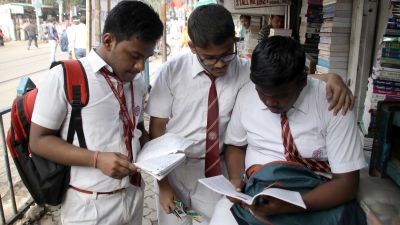

A six-month pause has been suggested on training systems more powerful than GPT-4, the latest model behind OpenAI's ChatGPT, which can now process image-related queries too. (Photo: Pixabay and AP)

Twitter owner and entrepreneur Elon Musk and Apple co-founder Steve Wozniak are among the high-profile names to have signed an open letter urging a pause in the development of powerful artificial intelligence-powered tools like ChatGPT.

Last Wednesday, a letter titled ‘Pause Giant AI Experiments: An Open Letter’ was posted on the website of the Future of Life Institute (FLI). It said, “We call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.”

What concerns does the letter raise, and what have its signatories proposed, even as AI models continue to grow more capable and efficient? We explain.

What is ChatGPT and what does the open letter say about it?

A chatbot is a computer programme that can have conversations with a person and has become a common part of websites in the last few years. On websites like Amazon and Flipkart, chatbots help people raise requests for returns and refunds. But a much more advanced version came about when OpenAI, a US-based AI research laboratory, created the chatbot ChatGPT last year.

According to OpenAI’s description, ChatGPT can answer “follow-up questions” and can also “admit its mistakes, challenge incorrect premises, and reject inappropriate requests.” It is based on the company’s GPT-3.5 series of large language models (LLMs). GPT stands for Generative Pre-trained Transformer, a kind of computer language model that relies on deep learning techniques to produce human-like text based on inputs.

With artificial intelligence (AI), instead of human programmers writing explicit instructions for every task, large amounts of data are fed into a system, and the programme uses that data to train itself to recognise patterns and respond in a meaningful way.
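The idea of a programme learning from data, rather than following hand-written rules, can be illustrated with a deliberately tiny Python sketch. It is not how GPT works: GPT models are transformer neural networks trained on enormous amounts of text. This toy instead simply counts which word follows which in a few sample sentences (a so-called bigram model) and then uses those counts to extend a prompt. The function names `train` and `generate` and the sample text are illustrative assumptions, not anything from OpenAI.

```python
from collections import Counter, defaultdict

# Toy illustration only (hypothetical names); this is NOT OpenAI's method.


def train(text):
    """Learn from data: count, for every word, which words follow it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(model, prompt, length=5):
    """Extend the prompt by repeatedly picking the most frequent next word seen in training."""
    words = prompt.lower().split()
    for _ in range(length):
        candidates = model.get(words[-1])
        if not candidates:
            break  # the model never saw this word followed by anything
        # most_common breaks ties in the order words were first seen
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical sample "training data"
corpus = ("the chatbot answers questions . the chatbot admits mistakes . "
          "the model learns from data .")
model = train(corpus)
print(generate(model, "the", length=3))  # prints "the chatbot answers questions"
```

Nothing in the code was written to produce the phrase "the chatbot answers questions"; it falls out of the word counts learned from the sample text. Feed in different text and the same code produces different output, which is the basic sense in which such systems "train themselves" on data.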

The development of ChatGPT has been heralded as a new stage of technological development that promises to revolutionise how people seek out information, much like how search engines such as Google did earlier.

While there is no consensus on whether these claims will hold up, or on whether ChatGPT already lives up to its promise, its technical capability has been widely praised. ChatGPT has been reported to pass a final exam in an MBA course at the Wharton School of business, and GPT-4, the model behind its latest version, has been reported to pass a simulated US bar exam (for qualifying as a lawyer).

And what is the criticism raised here?

The letter was posted on the website of FLI, which describes its work as engaging in grantmaking, policy research and advocacy on areas such as AI. Its signatories include researchers, tech CEOs and other notable figures, such as Jaan Tallinn, co-founder of Skype; Craig Peters, CEO of Getty Images; and Yuval Noah Harari, author and professor at the Hebrew University of Jerusalem.

The letter argues that contemporary AI systems are becoming human-competitive at general tasks. The authors believe this “could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources.” They claim that this level of planning and management is not happening, even as an “out-of-control race” is under way to develop ever more powerful “digital minds” that not even their creators can understand or predict.

The letter also asks a range of questions, such as “Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization?” It adds that such decisions must not be delegated to “unelected tech leaders”.

So what do they suggest then?

A six-month pause has been suggested on training systems more powerful than GPT-4, the latest model behind ChatGPT, which can now process image-related queries too. “This pause should be public and verifiable, and include all key actors. If such a pause cannot be enacted quickly, governments should step in and institute a moratorium,” they say.

They say AI labs should use this pause to jointly develop a set of shared safety protocols for advanced AI design and development, overseen by independent outside experts.

More broadly, the letter proposes that AI developers work with policymakers on a robust governance and legal framework, including watermarking systems “to help distinguish real from synthetic”, liability for AI-caused harm and robust public funding for technical AI safety research.

Have OpenAI or other AI labs responded to the letter?

In the past, OpenAI has similarly used cautionary language to talk about AI and its impact. It noted in a 2023 post, “As we create successively more powerful systems, we want to deploy them and gain experience with operating them in the real world. We believe this is the best way to carefully steward AGI [Artificial General Intelligence] into existence—a gradual transition to a world with AGI is better than a sudden one. We expect powerful AI to make the rate of progress in the world much faster, and we think it’s better to adjust to this incrementally.”

But it did not respond to the letter immediately. Notably, Musk was one of the initial funders of OpenAI in 2015, but he eventually stepped away from it, citing a conflict of interest as Tesla was also working on AI.

James Grimmelmann, a Cornell University professor of digital and information law, told the AP that a letter signalling potential dangers might be a good idea, but noted, “It is also deeply hypocritical for Elon Musk to sign on given how hard Tesla has fought against accountability for the defective AI in its self-driving cars.”